Stop blaming AI for things people choose to do

A few weeks into 2026, timelines on X were flooded with sexually explicit deepfake images. Many depicted real people, sometimes without their consent and sometimes involving minors, and were created using xAI’s Grok.

The reaction wasn’t quiet.

Regulators launched investigations. The California Attorney General issued a cease-and-desist order demanding an end to nonconsensual deepfake outputs and threatening legal action if the company failed to comply.

Countries including Malaysia and Indonesia blocked Grok entirely over the risk of harmful content.

Governments in the U.K., EU, Canada, and beyond are examining legal compliance under online safety laws.

Platforms and journalists called for tighter restrictions. Critics framed this as an AI problem.

AI tools becoming “child sexual abuse machines” without urgent action, warns our CEO Kerry Smith.

— Internet Watch Foundation (IWF) (@IWFhotline) January 16, 2026

2025 was the worst year on record for online child sexual abuse reports actioned by our analysts, with increasing levels of photo-realistic AI material contributing to the… pic.twitter.com/pfdaaiP8WS

But here’s the thing most people miss:

Grok didn’t choose to do any of this.

People did.

- Someone wrote those prompts.

- Someone asked for those images.

- Someone published the outputs.

And when the human is invisible, responsibility slips away, and the tool gets the blame.

We’re blaming the wrong thing

AI didn’t flood timelines with explicit images. People did.

Every harmful AI output starts the same way: a human makes a choice, types a prompt, and presses enter.

When that human is not clearly accountable, blame slides downstream from the person to the tool.

That’s why we hear headlines about “dangerous AI.” Not “dangerous use.”

Not “who did this.” But “AI did this.”

Why anonymity breaks accountability

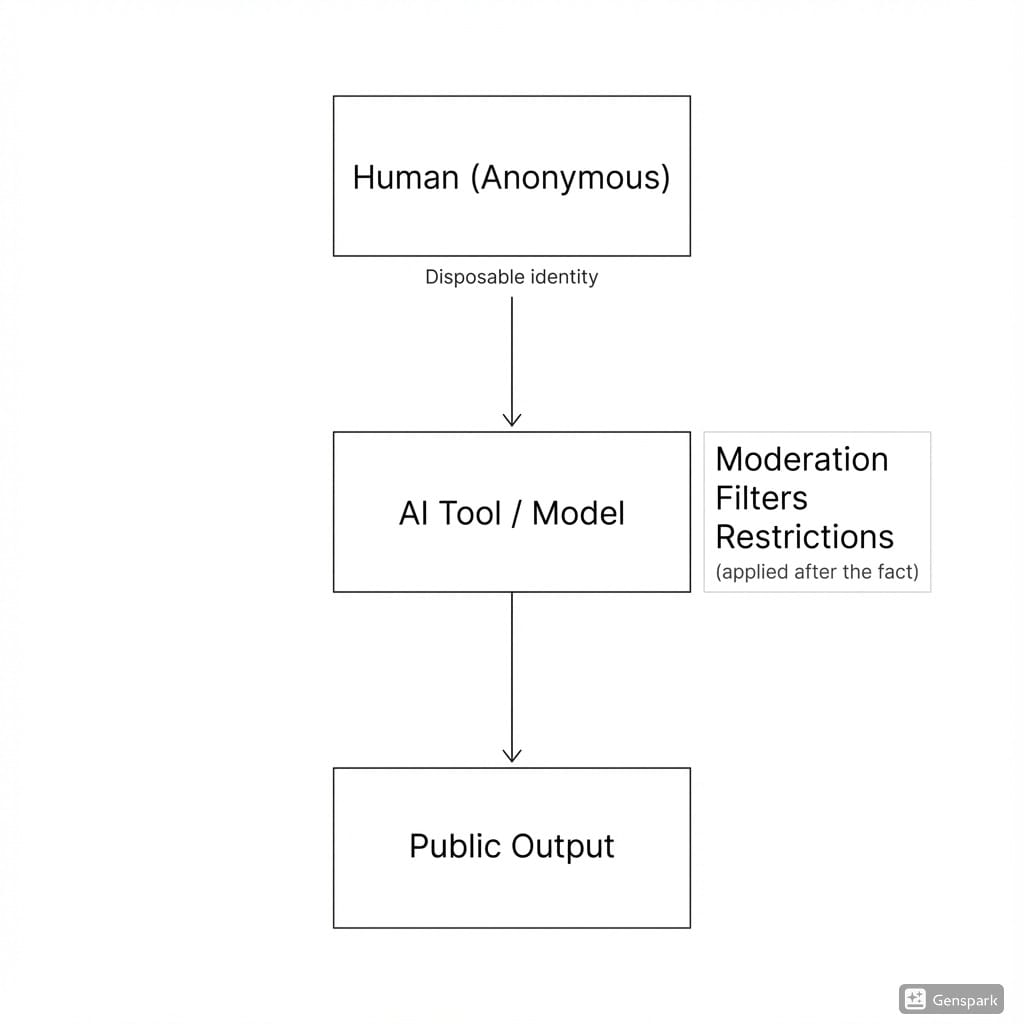

If AI keeps getting blamed for things humans choose to do, it’s not because people don’t understand how these systems work. It’s because anonymity removes the human from the line of responsibility.

When an action can’t be traced back to a real, accountable human, responsibility doesn’t disappear. It relocates.

And it almost always relocates to the tool.

When no one owns an action, the system owns the fallout.

If a user is anonymous and disposable, there’s no durable link between:

- the decision they made

- the harm it caused

- the consequences that follow

So platforms, moderators, and regulators step in. Not to correct behavior, but to limit capability.

That’s why the response to AI misuse rarely sounds like: “Who did this, and how do we stop them from doing it again?”

It sounds like: “How do we make sure the model can’t do this at all?”

Moderation fills the gap accountability leaves behind

When a system can’t reliably say “this action came from this human,” it defaults to broad controls:

- stricter filters

- reduced functionality

- overcorrection that affects everyone

This isn’t because platforms want to censor. It’s because anonymity forces them to manage risk at the wrong layer.

Behavior lives at the human layer. Enforcement happens at the model layer.

That mismatch is the real scaling problem.

Moderation works after something has already gone wrong. After an image is generated, posted, and spreads. At that point, the damage is already done.

So platforms respond the only way they can: by tightening rules around the tool itself.

More filters. More blocked prompts. More restrictions applied to everyone.

Wide. Imprecise. Expensive. And increasingly ineffective.

Elon Musk's X to block AI tool Grok from undressing images of real people after backlash https://t.co/rFIjiL386H

— BBC News (UK) (@BBCNews) January 15, 2026

Collective punishment is the result

Good users pay for bad behavior when identity is disposable.

Most people using AI tools aren’t trying to cause harm. They’re experimenting. Building. Creating.

But when misuse happens under anonymity, the response has to be global. Capabilities get removed. Outputs get watered down. Creative range shrinks.

Not because the majority misbehaved, but because the system can’t isolate responsibility.

And as long as humans can appear, misuse a tool, and disappear without friction, this pattern repeats.

Responsibility scales where moderation can’t

Responsibility works when it sticks.

When actions are tied to a persistent human identity:

- abuse becomes traceable

- patterns become visible

- consequences can be proportional

You don’t need perfect moderation. You don’t need omniscient AI safety.

You need authorship.

Right now, AI use is disposable by default.

- New account.

- New prompt.

- No memory.

Until that changes, moderation will always be reactive, and tools will keep getting blamed for human behavior.

The solution isn’t restriction. It’s authorship.

If moderation fails because it can’t isolate behavior, the obvious next move is restriction. Lock features behind subscriptions. Add friction. Raise the cost of misuse.

This helps. But it doesn’t solve the problem.

Even when AI image generation is limited to paid accounts, people create multiple accounts, rotate identities, and disappear and reappear without consequence.

The abuse doesn’t vanish. It just gets slightly more expensive.

Restrictions work only as long as users can’t walk around them. Right now, they can.

Because accounts aren’t people.

The missing link: one account, one real human

Accountability starts where duplication ends.

The fix isn’t harsher moderation or tighter filters. It’s making sure that:

- one human maps to one account

- actions persist across sessions

- misuse accumulates consequences

Not public identity. Not names. Not KYC.

Just uniqueness.

A way to know that when an action happens, it happened because this same human chose to do it.
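To make "uniqueness without identity" concrete, here is a minimal sketch in Python. It assumes some upstream proof-of-personhood step hands the platform an opaque `uniqueness_token` per human. That step, the `UniquenessRegistry` class, and every name below are hypothetical illustrations, not a description of how any real platform works. The registry stores only a keyed hash of the token, so it never holds a name or a document, and it refuses to attach a second account to the same human.

```python
import hashlib
import hmac


class UniquenessRegistry:
    """Hypothetical registry: one opaque human key maps to at most one account."""

    def __init__(self, server_secret: bytes) -> None:
        self._secret = server_secret
        self._account_by_human: dict[str, str] = {}

    def _human_key(self, uniqueness_token: str) -> str:
        # Keyed hash of the token. Only this digest is stored, so the
        # registry holds no name, email, or identity document.
        return hmac.new(
            self._secret, uniqueness_token.encode("utf-8"), hashlib.sha256
        ).hexdigest()

    def register(self, uniqueness_token: str, account_id: str) -> str:
        """Bind an account to a human; reject a second account for the same human."""
        human_key = self._human_key(uniqueness_token)
        existing = self._account_by_human.get(human_key)
        if existing is not None and existing != account_id:
            raise ValueError("this human already has an account")
        self._account_by_human[human_key] = account_id
        return human_key


registry = UniquenessRegistry(server_secret=b"example-secret")
first_key = registry.register("token-for-human-A", "account-1")

try:
    registry.register("token-for-human-A", "account-2")
    duplicate_blocked = False
except ValueError:
    duplicate_blocked = True
```

In this sketch the same human cannot open `account-2`, yet the platform still does not know who that human is. How the token is issued is the hard part and is deliberately left out here.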

How consequences work in practice

Accountability doesn’t require exposure. It requires memory.

Think about how other systems handle misuse:

- driving violations escalate when behavior repeats

- credit access changes based on long-term patterns

The power comes from persistence.

You can be pseudonymous. But you can’t reset history every time things go wrong.

Applied to AI use, that means repeated abuse leads to loss of privileges, and limits follow the human, not the account.
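As a rough illustration of limits that follow the human, the sketch below keeps a strike count per opaque human key rather than per account. The `StrikeLedger` class, the `human_key` values, and the escalation ladder are all assumptions made up for this example. The article does not specify thresholds or penalties.

```python
# Hypothetical escalation ladder; the steps and their order are assumptions.
LADDER = ["warning", "rate_limited", "image_generation_suspended", "banned"]


class StrikeLedger:
    """Hypothetical ledger: violations accumulate per human, not per account."""

    def __init__(self) -> None:
        self._strikes: dict[str, int] = {}

    def record_violation(self, human_key: str) -> str:
        """Add one strike and return the consequence that now applies."""
        count = self._strikes.get(human_key, 0) + 1
        self._strikes[human_key] = count
        # Repeat offenders climb the ladder and stay on the last rung.
        return LADDER[min(count, len(LADDER)) - 1]

    def status(self, human_key: str) -> str:
        """Current standing for a human, with no strike recorded as a side effect."""
        count = self._strikes.get(human_key, 0)
        if count == 0:
            return "good_standing"
        return LADDER[min(count, len(LADDER)) - 1]


ledger = StrikeLedger()
first = ledger.record_violation("human-key-1")
second = ledger.record_violation("human-key-1")
bystander = ledger.status("human-key-2")
```

Because the ledger is keyed by the human rather than the account, history does not reset when an account is abandoned, and a user who never misused the tool is unaffected by someone else's strikes.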

Why this protects AI instead of weakening it

When humans are accountable, tools don’t need to be neutered unless the tool itself is at fault.

Platforms don’t have to overcorrect. Builders don’t lose capabilities. Good users aren’t punished for bad behavior they didn’t commit.

The system can finally answer the right question: Not “what should the AI be allowed to do?” But “who keeps choosing to misuse it?”

AI is a tool. Tools don’t change the world by themselves. People do.

— Hiten Shah (@hnshah) January 10, 2026

The takeaway

Here’s what matters:

- AI misuse is a human problem, not a model flaw

- Moderation fails without accountability, so tools get punished

- Persistent identity fixes this, without neutering AI

AI doesn’t need to be moral. It needs moral authors.

Because tools don’t post content. Models don’t choose harm. Systems don’t decide intent. People do.

And until we design AI systems that remember who, we’ll keep punishing what.

If AI is going to operate in public spaces, someone has to stand behind the action.

Right now, no one does.

Do you think AI platforms should tie actions to unique humans, or is that a line we shouldn’t cross?